I’ve been experimenting with local AI models on my Mac Mini M4 and kept running into memory constraints when loading larger language models. The unified memory architecture on Apple Silicon is brilliant, but macOS’s automatic GPU memory management doesn’t always give the GPU as much as my workloads need. That’s when I discovered VRAM Pro.

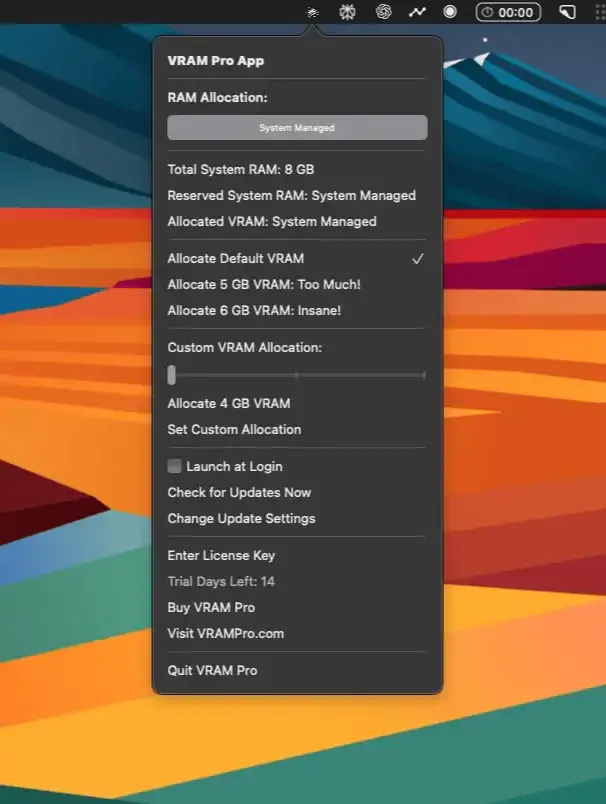

The first thing to understand is what this app actually does. It doesn’t magically create more GPU memory. On Apple Silicon the CPU and GPU share one pool of unified memory, and the app raises the share of that pool the GPU is allowed to use. On my Mac Mini M4 with 32GB of RAM, I can dedicate up to 30GB to GPU work instead of letting macOS decide. You could accomplish the same thing through terminal commands, but VRAM Pro provides a clean graphical interface with smart presets that make the process straightforward.
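
For reference, the terminal route usually comes down to a single `sysctl` setting. The sketch below only builds that command so you can inspect it before running anything. The key name `iogpu.wired_limit_mb` is the one commonly reported for recent macOS releases on Apple Silicon; treat it as my assumption, not something VRAM Pro documents. The function name is made up for illustration.

```python
def build_wired_limit_command(limit_gb: int) -> list[str]:
    """Return the sysctl command that would raise the GPU memory limit.

    Assumption: the key is `iogpu.wired_limit_mb` and takes a value in MB.
    Nothing is executed here; the command is only constructed.
    """
    if limit_gb <= 0:
        raise ValueError("limit_gb must be positive")
    limit_mb = limit_gb * 1024  # convert GB to the MB value sysctl expects
    return ["sudo", "sysctl", f"iogpu.wired_limit_mb={limit_mb}"]


if __name__ == "__main__":
    # 30GB on a 32GB machine, matching the figure quoted in this post
    print(" ".join(build_wired_limit_command(30)))
```

As far as I know, a value set this way does not survive a restart, which is presumably part of what the app handles for you.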

On my other machine, an M2 MacBook Air with 16GB of unified memory, I can allocate up to 14GB for GPU-intensive tasks. The interface displays your current VRAM status and offers preset configurations for different use cases - AI inference, video editing, 3D rendering, or custom allocations. Changes require administrator approval, with hash validation for security, which feels appropriate for system-level modifications.

The app particularly shines for specific workflows. When working with local language models through Ollama or LM Studio, having predictable GPU memory allocation prevents the frustrating mid-inference crashes I used to experience. Video editors working with DaVinci Resolve or Final Cut Pro can dedicate more memory to GPU acceleration. For 3D work in Blender, a consistent memory allocation can improve render times.

The app’s own overhead is minimal - it uses negligible resources since it’s just adjusting system parameters rather than actively monitoring or managing memory. The changes persist across reboots until you modify them again. You can restore default macOS memory management anytime through the app.

One limitation to understand: this is purely for Apple Silicon Macs. Intel Macs with discrete GPUs won’t benefit from this approach since they have dedicated VRAM already. Also, the maximum allocable memory scales with your total RAM - an 8GB Mac can only allocate up to 6GB to GPU tasks, which might not be enough for serious AI or creative work.
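
The three ceilings quoted in this post (6GB on an 8GB Mac, 14GB on 16GB, 30GB on 32GB) all leave 2GB for the system. Here is a minimal sketch of that pattern. The fixed 2GB reserve is inferred from those numbers, not a rule the developer has published, and the function name is hypothetical.

```python
def max_gpu_allocation_gb(total_ram_gb: int, system_reserve_gb: int = 2) -> int:
    """Estimate the largest GPU allocation for a given amount of unified memory.

    Assumption: a flat reserve (2GB by default) is always left for macOS,
    which matches the three figures in this post but is not documented.
    """
    if total_ram_gb <= system_reserve_gb:
        raise ValueError("total RAM must exceed the system reserve")
    return total_ram_gb - system_reserve_gb


for total in (8, 16, 32):
    print(f"{total}GB Mac: up to {max_gpu_allocation_gb(total)}GB for the GPU")
```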

I tested this over two weeks on both my M2 MacBook Air and Mac Mini M4. For general productivity work, the default macOS memory management works fine. But when I’m running local AI models or processing 4K video, having manual control over GPU memory allocation makes a real difference. A 13B-parameter model that previously crashed now loads and runs smoothly with a larger GPU allocation.
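
To judge whether a model will fit before you download it, a back-of-the-envelope estimate is parameter count times bytes per weight, plus some headroom for the context cache and runtime buffers. The sketch below assumes a flat 20% overhead, a rule of thumb picked for illustration, not a measured figure. Real usage depends on the quantization format and context length.

```python
def estimate_model_memory_gb(
    params_billion: float,
    bits_per_weight: int,
    overhead: float = 0.2,
) -> float:
    """Rough memory footprint of a model in (decimal) GB.

    Assumption: `overhead` is a flat fraction added on top of the weights
    to cover the context cache and buffers; 0.2 is only a placeholder.
    """
    # One billion parameters at 8 bits each is roughly 1GB of weights.
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * (1 + overhead)


for bits in (4, 8, 16):
    estimate = estimate_model_memory_gb(13, bits)
    print(f"13B at {bits}-bit: about {estimate:.1f}GB")
```

By this estimate a 4-bit 13B model needs roughly 8GB, which fits under the 14GB ceiling on a 16GB machine, while an 8-bit version at roughly 16GB does not.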

VRAM Pro offers a free trial version and paid licensing through Gumroad. Exact pricing isn’t listed on the website, but the trial lets you evaluate whether this addresses your specific workflow needs. The developer is responsive to feedback and regularly updates the app.

For most Mac users, this is unnecessary - macOS handles memory well for typical tasks. But if you’re running local AI models, working with high-resolution video, or doing serious 3D rendering on Apple Silicon, having manual GPU memory control transforms what’s possible on your Mac. The alternative is either terminal commands or accepting macOS’s automatic decisions, neither of which is ideal when you need consistent, predictable performance for GPU-intensive work.